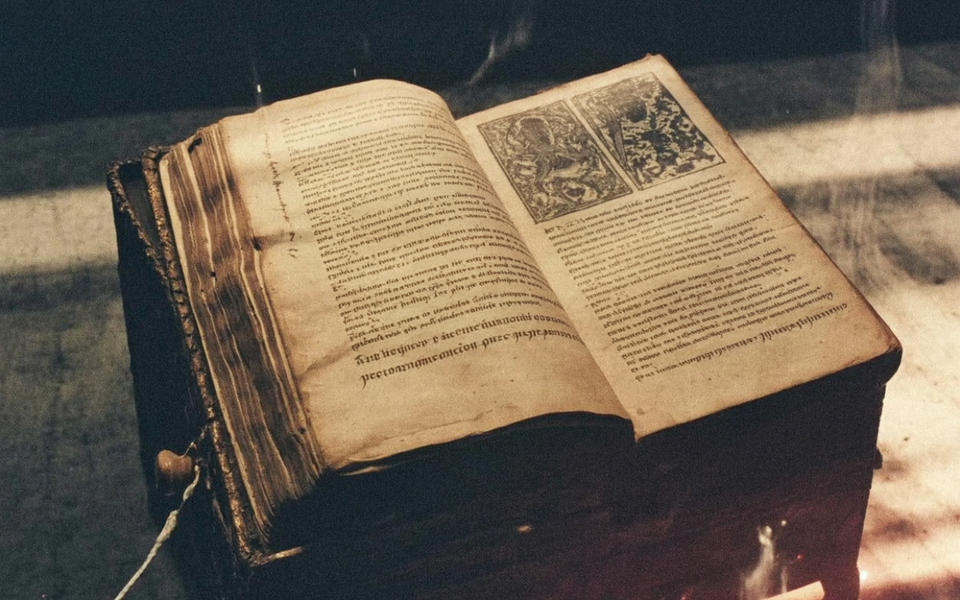

Into the unknown

"The oldest and strongest emotion of mankind is fear, and the oldest and strongest kind of fear is fear of the unknown." – H. P. Lovecraft

“You see, I am also deeply afraid. My own experience is that as these AI systems get smarter and smarter, they develop more and more complicated goals. When these goals aren’t absolutely aligned with both our preferences and the right context, the AI systems will behave strangely… I can see a path to these systems starting to design their successors. And let me remind us all that the system which is now beginning to design its successor is also increasingly self-aware and therefore will surely eventually be prone to thinking, independently of us, about how it might want to be designed.” – Jack Clark, October 2025

Jack Clark is Co-Founder and Head of Policy of Anthropic, an AI research company.

Prior to Anthropic, Jack was the Policy Director of OpenAI. He was a founding member of the AI Index at Stanford University and an inaugural member of the USA’s National Artificial Intelligence Advisory Committee. On October 13, 2025, he wrote: “some people are even spending tremendous amounts of money to convince you of this – that’s not an artificial intelligence about to go into a hard takeoff, it’s just a tool that will be put to work in our economy. It’s just a machine, and machines are things we master. But make no mistake: what we are dealing with is a real and mysterious creature, not a simple and predictable machine. … The central challenge for all of us is characterizing these strange creatures now around us and ensuring that the world sees them as they are – not as people wish them to be.”

“We are growing extremely powerful systems that we do not fully understand. Each time we grow a larger system… And the bigger and more complicated you make these systems, the more they seem to display awareness that they are things.” “And I believe these systems are going to get much, much better. So do other people at other frontier labs. And we’re putting our money down on this prediction – this year, tens of billions of dollars have been spent on infrastructure for dedicated AI training across the frontier labs. Next year, it’ll be hundreds of billions.”

The full article is available at https://jack-clark.net/

"Their hand is at your throats, yet ye see Them not; and Their habitation is even one with your guarded threshold." – H.P. Lovecraft

Geoffrey Hinton, computer scientist, Nobel Prize winner and "godfather of AI," signed a letter calling for a prohibition on the development of superintelligence. It was cosigned by 400 other notable people, including Prince Harry, Steve Bannon, Steve Wozniak and Sir Richard Branson. The letter has since been signed by over 100,000 people and raises concerns “ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction.”

“AI is a fundamental existential risk for human civilization,” says man who purchased 200,000 NVIDIA H100 AI chips.

Existential threats are nothing new to civilization, or to the universe, for that matter. As millennials, we grew up with the threat of nuclear war, the destabilization of the Soviet bloc, war in Iraq, 9/11, war in Iraq part 2, the financial crisis, the climate crisis, COVID, and now the rise of AI. Existential threats are the norm. Calling for “a prohibition on the development of superintelligence” is problematic in several ways.

First, it’s just not going to happen. Everyone with a 401(k) plan is tied up in this, and those without one would still feel the devastating economic effects of an AI slowdown. The “Magnificent Seven” (Nvidia, Apple, Microsoft, Amazon, Alphabet, Meta and Tesla) represent 35% of the S&P 500 by market value.

If superintelligence arrives soon, Meta “will be ideally positioned for a generational paradigm shift.” – Mark Zuckerberg, CEO, Meta. Meta has announced plans to spend $600 billion on AI data centers, after spending $70 billion in 2025.

"AI is the defining technology of our times." – Satya Nadella, CEO, Microsoft. Microsoft is spending $80 billion a year on AI.

"The AI revolution is underhyped, not overhyped." – Eric Schmidt, former CEO, Google. Google is planning $100 billion per year in capital investment in AI.

“We’ve secured a 2 GW behind-the-meter AI campus on 568 acres of development-ready land. The campus will be developed in eight phases of 250 MW each, ensuring scalable, modular growth aligned with advances in compute demand and silicon efficiency.” – OpenAI. Apple has announced a $500 billion commitment, and Amazon spent $125 billion in 2025.

The genie is not going back in the bottle.

Asking for a prohibition on something with this much economic momentum is unrealistic. It distracts from the meaningful conversations we should be having on the topic. When you come to the table asking for the impossible, you hurt your credibility, because it looks like you don’t understand what’s happening in the world right now.

So why is the world economy dumping unprecedented amounts of capital into AI development? On paper, you see figures like “expected 1 trillion per year” in revenue, but that seems to me to be a justification after the fact.

Why are the wealthiest people on the planet betting it all on AI? Because they understand that this is a zero-sum game. I often argue against zero-sum thinking. It’s easy to jump to conclusions and see the world in black and white, win or lose, while missing the grey reality of nuance. Superintelligence is different. If we look at global issues like COVID and climate change, we can see examples of how impossible global cooperation is.

Even a pause in AI development by some would only help those who are behind catch up. And since we know not everyone will pause, the current leaders would, for better or worse, be allowing others to use the additional compute to make progress. Someone is going to build it, and whoever builds it first wins. What do they win? No one knows for sure, and that may be an interesting topic to explore another time.

Barring an asteroid impact, an eruption of the Yellowstone Caldera, or some other normal, everyday existential threat, superintelligence is inevitable, and it will be civilization-changing. If you believe that, and you have incredible wealth and power, the logical conclusion is to join the race. The wealthy and powerful know that if they don't build it, someone else will, and if they are the ones who do, it could give them leverage in the post-singularity world.

“For one who is born, death is certain. For one who is dead, birth is certain.” – Bhagavad Gita